How to Install Spark and PySpark on CentOS

Let's check the Java version.

java -version

openjdk version "1.8.0_232"

OpenJDK Runtime Environment (build 1.8.0_232-b09)

OpenJDK 64-Bit Server VM (build 25.232-b09, mixed mode)

Java 8 is installed, which is one of the Java versions Spark 3.0 supports.
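If you want to make the same check from a script, the version string can be parsed. The sketch below is only an illustration: `java_major_version` is a hypothetical helper, not part of Java or Spark, and it assumes the output contains a quoted version string like the one shown above. It handles both the legacy `1.8.0_232` numbering and the newer `11.0.6` style.

```python
import re


def java_major_version(version_output: str) -> int:
    """Return the major Java version found in `java -version` output.

    Hypothetical helper for illustration; assumes the output contains
    a quoted version string such as "1.8.0_232" or "11.0.6".
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("no Java version string found")
    first, second = match.group(1), match.group(2)
    # Legacy numbering: "1.8.0_232" means Java 8.
    if first == "1" and second is not None:
        return int(second)
    return int(first)


sample = 'openjdk version "1.8.0_232"'
print(java_major_version(sample))  # prints 8
```

Note that `java -version` writes to standard error, so when capturing it with `subprocess.run(["java", "-version"], capture_output=True, text=True)` the text to parse is in `.stderr`.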

How to install Spark 3.0 on CentOS

Let's download Spark from the Spark website. This tutorial uses the 3.0.0-preview2 release built for Hadoop 3.2.

wget http://mirrors.gigenet.com/apache/spark/spark-3.0.0-preview2/spark-3.0.0-preview2-bin-hadoop3.2.tgz

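Let's untar spark-3.0.0-preview2-bin-hadoop3.2.tgz into /opt and create a symlink at /opt/spark that points to the extracted directory. The link target is given as an absolute path so that the link works regardless of the directory the command is run from.

tar -xzf spark-3.0.0-preview2-bin-hadoop3.2.tgz -C /opt

ln -s /opt/spark-3.0.0-preview2-bin-hadoop3.2 /opt/spark

Check the link from inside /opt.

cd /opt

ls -lrt spark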

lrwxrwxrwx 1 root root 39 Jan 17 19:55 spark -> /opt/spark-3.0.0-preview2-bin-hadoop3.2

Let's add the Spark path to our .bashrc file.

echo 'export SPARK_HOME=/opt/spark' >> ~/.bashrc

echo 'export PATH=$SPARK_HOME/bin:$PATH' >> ~/.bashrc

Reload ~/.bashrc so the new variables take effect in the current shell.

source ~/.bashrc
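To confirm that the two exports took effect, the environment can be inspected from Python. This is a minimal sketch under the assumptions of this tutorial (SPARK_HOME set, and its bin directory on PATH); `spark_env_problems` is a hypothetical name introduced here for illustration.

```python
import os


def spark_env_problems(env):
    """Return a list of human-readable problems with the Spark variables in `env`."""
    problems = []
    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        problems.append("SPARK_HOME is not set")
        return problems
    # The tutorial puts $SPARK_HOME/bin at the front of PATH.
    expected_bin = os.path.join(spark_home, "bin")
    path_entries = env.get("PATH", "").split(os.pathsep)
    if expected_bin not in path_entries:
        problems.append(f"{expected_bin} is not on PATH")
    return problems


# Check the environment of the current process.
print(spark_env_problems(dict(os.environ)) or "Spark environment looks fine")
```

The function takes the environment as a plain dictionary, so it can be exercised with made-up values as well as with `os.environ`.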

We can check now if Spark is working now. Try following commands.

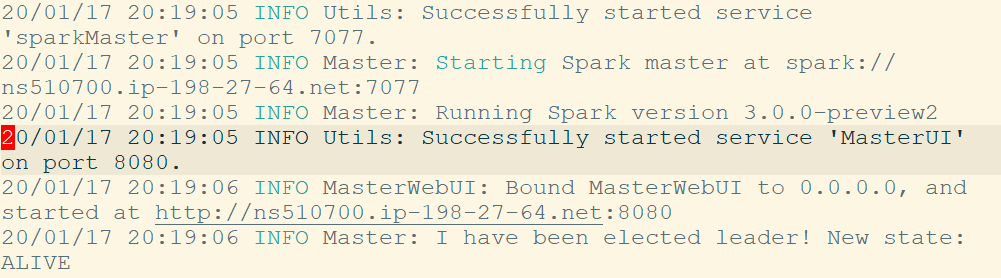

$SPARK_HOME/sbin/start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /opt/spark/logs/spark-root-org.apache.spark.deploy.master.Master-1-ns510700.out

If successfully started, you should see something like shown in the snapshot below.
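Another quick way to see whether the master came up is to test whether its web UI port accepts connections. The sketch below assumes the standalone master's default web UI port, 8080, and that the UI is reachable on localhost; both are assumptions, since the port can be changed in the configuration, and the master's log file normally records the address it actually bound to. `port_is_open` is a hypothetical helper name.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


# Assumption: 8080 is the default web UI port of the standalone master.
state = "open" if port_is_open("localhost", 8080) else "closed"
print(f"master web UI port 8080 is {state}")
```

An open port only shows that something is listening there; opening the page in a browser confirms that it is the Spark master.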

How to install PySpark

Installing pyspark with pip is very easy. Make sure you have Python 3 installed and a virtual environment available. See the tutorial on how to install Conda and enable a virtual environment.

pip install pyspark

If the installation succeeds, you should see a message like the following; the version numbers depend on your pyspark version.

Successfully built pyspark

Installing collected packages: py4j, pyspark

Successfully installed py4j-0.10.7 pyspark-2.4.4

One last thing: we need to add py4j-0.10.8.1-src.zip to PYTHONPATH to avoid the following error.

Py4JError: org.apache.spark.api.python.PythonUtils.getEncryptionEnabled does not exist in the JVM

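Let's fix our PYTHONPATH to take care of the above error. Note that pip installed pyspark 2.4.4 with py4j 0.10.7, while the Spark 3.0.0-preview2 download ships py4j 0.10.8.1. Pointing PYTHONPATH at the Python sources under $SPARK_HOME makes Python use the libraries that match the installed Spark.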

echo 'export PYTHONPATH=$SPARK_HOME/python:$SPARK_HOME/python/lib/py4j-0.10.8.1-src.zip' >> ~/.bashrc

source ~/.bashrc
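The py4j version in that path changes between Spark releases, so a hard-coded file name may stop matching after an upgrade. As a hedged sketch, the archive can be looked up instead of typed by hand. It assumes the layout used above, with the archive under $SPARK_HOME/python/lib and named py4j-<version>-src.zip; `pick_py4j_zip` and `pythonpath_for` are hypothetical helpers, not part of pyspark.

```python
import os
import re

# Matches names such as "py4j-0.10.8.1-src.zip".
PY4J_ZIP = re.compile(r"^py4j-[\d.]+-src\.zip$")


def pick_py4j_zip(filenames):
    """Return the single py4j source archive name found in `filenames`."""
    matches = [name for name in filenames if PY4J_ZIP.match(name)]
    if len(matches) != 1:
        raise LookupError(f"expected exactly one py4j archive, found {matches}")
    return matches[0]


def pythonpath_for(spark_home):
    """Build a PYTHONPATH value from whatever py4j archive Spark bundles."""
    lib_dir = os.path.join(spark_home, "python", "lib")
    py4j_zip = pick_py4j_zip(os.listdir(lib_dir))
    return os.pathsep.join(
        [os.path.join(spark_home, "python"), os.path.join(lib_dir, py4j_zip)]
    )
```

With Spark installed as above, `print(pythonpath_for(os.environ["SPARK_HOME"]))` prints a value that can be used in the export line in place of the hand-written path.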

Let's start ipython, import pyspark, and initialize a SparkContext.

ipython

In [1]: from pyspark import SparkContext

In [2]: sc = SparkContext("local")

20/01/17 20:41:49 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

If you see the output above, pyspark is working fine.

That's it for now!